Originally published on May 6, 2024

Preface: Qualcomm positions the Snapdragon 8 Gen 2 Mobile Platform as its premium tier for connected computing, with AI features engineered in across the platform.

Background: A vertex buffer object (VBO) is an OpenGL feature that provides methods for uploading vertex data (position, normal vector, color, etc.) to the video device for non-immediate-mode rendering.

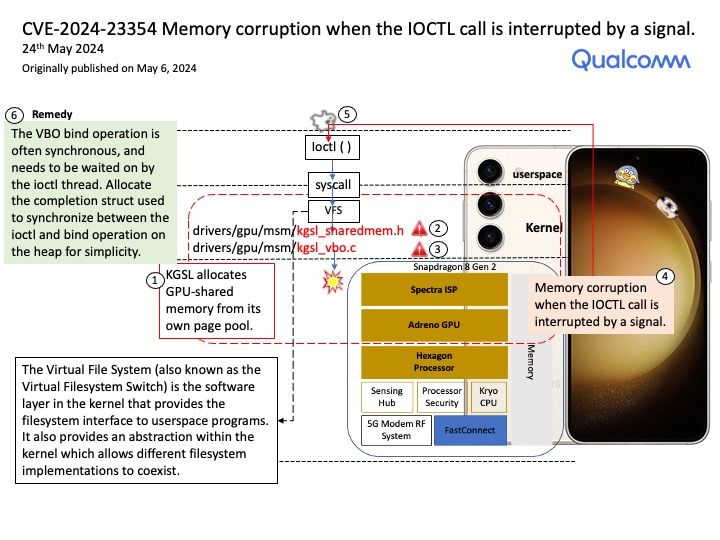

KGSL (Kernel Graphics Support Layer), the Qualcomm kernel driver for Adreno GPUs, allocates GPU-shared memory from its own page pool. A VBO is a buffer of memory which the GPU can access. That’s all it is. A VAO is an object that stores vertex bindings. This means that when you call glVertexAttribPointer and friends to describe your vertex format, that format information gets stored in the currently bound VAO.

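Vulnerability details: Memory corruption when the IOCTL call is interrupted by a signal. The remedy suggests the likely cause: the completion struct shared by the ioctl thread and the bind operation was not heap-allocated, so an ioctl that returned early on a signal could leave the still-running bind operation pointing at memory that was no longer valid.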

Remedy: The VBO bind operation is often synchronous, and needs to be waited on by the ioctl thread. Allocate the completion struct used to synchronize between the ioctl and bind operation on the heap for simplicity.
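The remedy can be illustrated with a minimal user-space sketch in C. This is not the KGSL patch: it uses pthreads in place of the kernel's completion API, and the names `bind_sync`, `bind_worker`, and `do_bind` are hypothetical. The reference count is also an assumption added here so that whichever side finishes last frees the struct; the advisory only states that the struct is moved to the heap.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical stand-in for a kernel completion, plus a reference count. */
struct bind_sync {
    pthread_mutex_t lock;
    pthread_cond_t cond;
    bool done;
    int refs; /* one reference for the waiter, one for the worker */
};

static struct bind_sync *bind_sync_alloc(void)
{
    struct bind_sync *s = malloc(sizeof(*s));

    if (!s)
        return NULL;
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->cond, NULL);
    s->done = false;
    s->refs = 2;
    return s;
}

/* Drop one reference; the last owner to let go frees the struct. */
static void bind_sync_put(struct bind_sync *s)
{
    int left;

    pthread_mutex_lock(&s->lock);
    left = --s->refs;
    pthread_mutex_unlock(&s->lock);
    assert(left >= 0);
    if (left == 0) {
        pthread_cond_destroy(&s->cond);
        pthread_mutex_destroy(&s->lock);
        free(s);
    }
}

/* Worker: pretend to finish the bind, signal completion, drop its reference. */
static void *bind_worker(void *arg)
{
    struct bind_sync *s = arg;

    pthread_mutex_lock(&s->lock);
    s->done = true;
    pthread_cond_signal(&s->cond);
    pthread_mutex_unlock(&s->lock);
    bind_sync_put(s);
    return NULL;
}

/*
 * ioctl-like entry point. `interrupted` simulates a signal arriving before
 * the wait finishes. Returns 0 on completion, -1 if interrupted, -2 on error.
 */
static int do_bind(bool interrupted)
{
    struct bind_sync *s = bind_sync_alloc();
    pthread_t worker;
    int ret = 0;

    if (!s)
        return -2;
    if (pthread_create(&worker, NULL, bind_worker, s) != 0) {
        bind_sync_put(s); /* waiter's reference */
        bind_sync_put(s); /* reference the worker never took */
        return -2;
    }

    if (interrupted) {
        /* Return early; the worker's pointer stays valid because the
         * struct lives on the heap and the worker still holds a reference. */
        ret = -1;
    } else {
        pthread_mutex_lock(&s->lock);
        while (!s->done)
            pthread_cond_wait(&s->cond, &s->lock);
        pthread_mutex_unlock(&s->lock);
    }

    bind_sync_put(s);
    pthread_join(worker, NULL); /* only so the demo exits cleanly */
    return ret;
}
```

Calling `do_bind(false)` waits for the worker and returns 0, while `do_bind(true)` returns -1 without waiting. In both cases the worker touches only heap memory that it still holds a reference to. Had `struct bind_sync` been a local variable of `do_bind`, the early return would have left the worker writing into a dead stack frame.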

Official announcement: Please refer to the link for details – https://nvd.nist.gov/vuln/detail/CVE-2024-23354