Preface: Unlike OpenAI’s ChatGPT, Chat with RTX does not carry context from one prompt to the next. If you ask it for examples of fish in one prompt and then ask it to describe “the fish” in the next, it draws a blank; users need to spell out everything explicitly in each prompt.
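To illustrate what "spelling everything out" means in practice, here is a minimal sketch of how a caller could carry the earlier exchange along by hand when the chatbot itself keeps no memory. The function `build_prompt` and the sample history are hypothetical and only for illustration; they are not part of Chat with RTX.

```python
def build_prompt(question, history=None):
    """Return the full text a stateless chatbot would see for this turn.

    `history` is an optional list of (question, answer) pairs that the
    caller chooses to resend; a stateless app will not add it for you.
    """
    if not history:
        # No memory: the model sees only the new question.
        return question
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in history)
    return f"{context}\nQ: {question}"


# Hypothetical earlier exchange.
history = [("Give examples of fish.", "Salmon, trout, and cod.")]

bare = build_prompt("Describe the fish.")
explicit = build_prompt("Describe the fish.", history)
```

In `bare`, "the fish" refers to nothing the model can see, while `explicit` restates the earlier answer so the follow-up question is self-contained.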

Background: Chat with RTX defaults to AI startup Mistral’s open-source model but supports other text-based models, including Meta’s Llama 2, which is also open-source.

Chat with RTX is a demo app that lets you personalize a GPT large language model (LLM) connected to your own content: docs, notes, videos, or other data. Leveraging retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, you can query a custom chatbot to quickly get contextually relevant answers. It all runs locally on your Windows RTX PC or workstation.
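The general idea behind retrieval-augmented generation can be sketched in a few lines: find the local document most relevant to the question, then place it in the prompt ahead of the question. The sketch below is a toy stand-in that uses simple word-count cosine similarity; it is an assumption for illustration and does not reflect how Chat with RTX actually indexes or retrieves content. All names (`retrieve`, `build_rag_prompt`, `NOTES`) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_rag_prompt(query, documents, k=1):
    """Prepend the retrieved text to the question before it goes to the LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical local notes standing in for the user's own content.
NOTES = [
    "The quarterly report is due on Friday.",
    "Salmon and trout are freshwater fishes found in cold rivers.",
    "The office printer is on the second floor.",
]

prompt = build_rag_prompt("Which fishes live in rivers?", NOTES)
```

A real system would typically replace the word-count similarity with learned embeddings and a vector index, but the flow is the same: retrieve first, then generate from the retrieved context.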

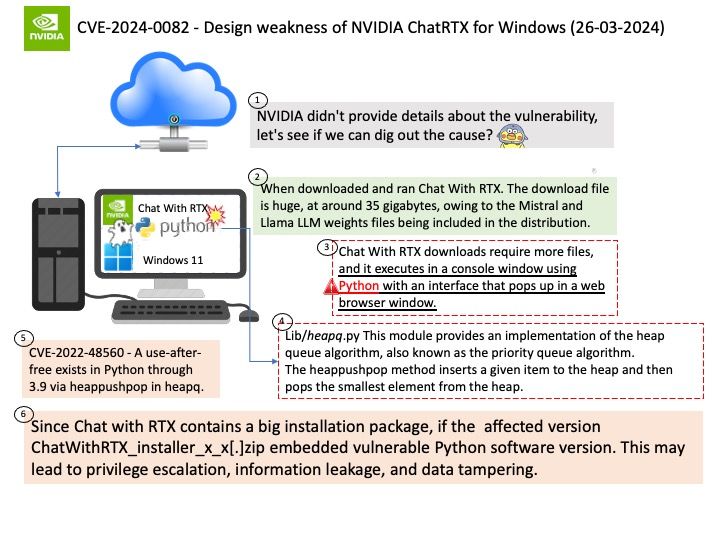

Vulnerability details: NVIDIA ChatRTX for Windows contains a vulnerability in the UI, where an attacker can cause improper privilege management by sending open file requests to the application. A successful exploit of this vulnerability might lead to local escalation of privileges, information disclosure, and data tampering.

Official announcement: Please refer to the link for details – https://nvidia.custhelp.com/app/answers/detail/a_id/5532