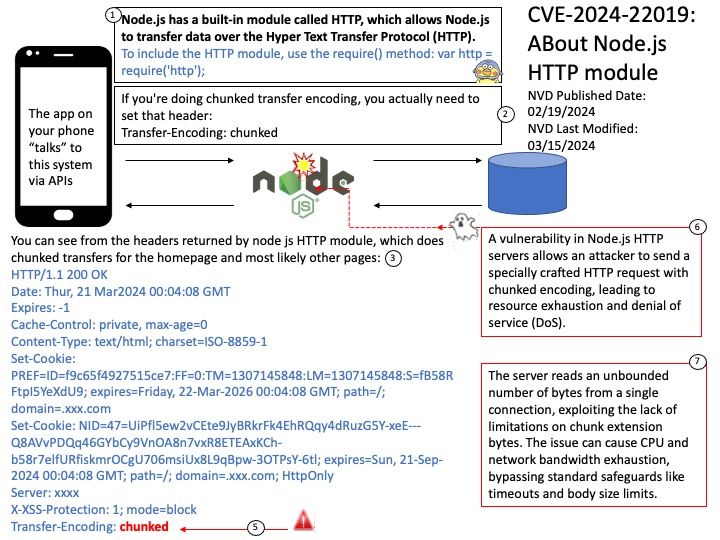

Preface: The Express framework is built on top of the Node.js HTTP module and provides a clean way to write backend code.

Background: The HTTP module builds on two other built-in modules. Its http.Server class extends net.Server, which in turn extends EventEmitter:

Net module: Provides an asynchronous network API for creating stream-based TCP (or IPC) servers and clients.

Events module: Provides an event-driven architecture through the EventEmitter class.
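To see this hierarchy in practice, here is a minimal TypeScript sketch, assuming Node.js with its type definitions available (for example via @types/node). It creates an HTTP server without listening on a port and checks the prototype chain; the logging handlers are illustrative only.

```typescript
import { EventEmitter } from "node:events";
import * as http from "node:http";
import * as net from "node:net";

// Creating a server without calling listen() opens no socket,
// so this script exits on its own.
const server = http.createServer((_req, res) => {
  res.end("ok");
});

// http.Server extends net.Server, which in turn extends EventEmitter.
console.log(server instanceof http.Server); // true
console.log(server instanceof net.Server); // true
console.log(server instanceof EventEmitter); // true

// Because the server is an EventEmitter, both connection-level (net) and
// request-level (http) events are observed through the same on() API.
server.on("connection", (socket: net.Socket) => {
  console.log("new TCP connection from", socket.remoteAddress);
});
server.on("request", (req: http.IncomingMessage) => {
  console.log(req.method, req.url);
});
```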

Ref: Chunked transfer encoding is a streaming data transfer mechanism available in Hypertext Transfer Protocol (HTTP) version 1.1, defined in RFC 9112, Section 7.1. In chunked transfer encoding, the data stream is divided into a series of non-overlapping "chunks". The chunks are sent out and received independently of one another.

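Each chunk is preceded by a line giving its size in bytes as a hexadecimal number, optionally followed by chunk extensions (parameters of the form ;name or ;name=value), and terminated by CRLF. The transmission ends when a zero-length chunk is received. The chunked keyword in the Transfer-Encoding header indicates chunked transfer.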
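The framing described above can be sketched in a few lines of TypeScript. This is an illustrative sketch, not the Node.js parser: encodeChunked and decodeChunked are hypothetical helper names, the body is assumed to be ASCII so that string length equals byte length, and trailer fields after the last chunk are not parsed.

```typescript
// Assumes ASCII text, so string length equals byte length.

// Splits a body into chunks of at most chunkSize bytes and frames each one
// as: chunk-size in hex, CRLF, chunk data, CRLF.
function encodeChunked(body: string, chunkSize: number): string {
  let wire = "";
  for (let i = 0; i < body.length; i += chunkSize) {
    const chunk = body.slice(i, i + chunkSize);
    wire += chunk.length.toString(16) + "\r\n" + chunk + "\r\n";
  }
  // The zero-length last chunk plus an empty trailer section ends the body.
  return wire + "0\r\n\r\n";
}

// Reassembles the body, skipping any chunk extensions after the size.
// Trailer fields after the last chunk are not parsed in this sketch.
function decodeChunked(wire: string): string {
  let body = "";
  let pos = 0;
  for (;;) {
    const lineEnd = wire.indexOf("\r\n", pos);
    if (lineEnd === -1) throw new Error("incomplete chunk-size line");
    const line = wire.slice(pos, lineEnd);
    // Everything after the first ';' is a chunk extension and is ignored.
    const semi = line.indexOf(";");
    const sizeField = (semi === -1 ? line : line.slice(0, semi)).trim();
    if (!/^[0-9A-Fa-f]+$/.test(sizeField)) throw new Error("invalid chunk size");
    const size = parseInt(sizeField, 16);
    pos = lineEnd + 2;
    if (size === 0) return body; // last chunk reached
    if (pos + size + 2 > wire.length) throw new Error("truncated chunk");
    body += wire.slice(pos, pos + size);
    pos += size;
    if (wire.slice(pos, pos + 2) !== "\r\n") throw new Error("missing CRLF after chunk data");
    pos += 2;
  }
}

const wire = encodeChunked("Hello, chunked world!", 8);
console.log(JSON.stringify(wire));
// "8\r\nHello, c\r\n8\r\nhunked w\r\n5\r\norld!\r\n0\r\n\r\n"
console.log(decodeChunked("5;note=hi\r\nhello\r\n0\r\n\r\n")); // hello
```

Note that the decoder simply skips extension bytes; nothing in the framing itself bounds how long that extension text may be.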

Vulnerability details: A vulnerability in Node.js HTTP servers allows an attacker to send a specially crafted HTTP request with chunked encoding, leading to resource exhaustion and denial of service (DoS). Because the server does not limit the number of chunk extension bytes, an attacker can make it read an unbounded number of bytes from a single connection. The attack can exhaust CPU and network bandwidth while bypassing standard safeguards such as timeouts and body size limits.
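To make the missing safeguard concrete, the following TypeScript sketch parses a single chunk-size line one character at a time and rejects it as soon as its chunk extension exceeds a byte budget. ChunkSizeLineParser, parseChunkSizeLine and the MAX_CHUNK_EXT_BYTES value of 1024 are hypothetical choices for illustration; they are not the Node.js implementation or the limit adopted by its fix.

```typescript
// Hypothetical limit for this sketch only; not the value Node.js uses.
const MAX_CHUNK_EXT_BYTES = 1024;

function isHexDigit(ch: string): boolean {
  return /^[0-9A-Fa-f]$/.test(ch);
}

// Hypothetical incremental parser for one line: chunk-size [ chunk-ext ] CRLF.
// Characters are pushed one at a time, as they would arrive from a socket.
// Use one parser instance per line.
class ChunkSizeLineParser {
  private digits = "";
  private extBytes = 0;
  private inExtension = false;
  private sawCR = false;
  private readonly maxExtBytes: number;

  constructor(maxExtBytes: number) {
    this.maxExtBytes = maxExtBytes;
  }

  // Returns the chunk size once CRLF completes the line, otherwise null.
  // Throws as soon as the line is invalid or the extension is too long.
  push(ch: string): number | null {
    if (this.sawCR) {
      if (ch !== "\n") throw new Error("expected LF after CR");
      return parseInt(this.digits, 16);
    }
    if (ch === "\r") {
      if (this.digits === "") throw new Error("missing chunk size");
      this.sawCR = true;
      return null;
    }
    if (!this.inExtension) {
      if (isHexDigit(ch)) {
        this.digits += ch;
        // Sketch choice: cap the digit count so the size stays a safe integer.
        if (this.digits.length > 8) throw new Error("chunk size too large");
        return null;
      }
      // After the size, only whitespace or ';' may start an extension.
      if (this.digits === "" || (ch !== ";" && ch !== " " && ch !== "\t")) {
        throw new Error("invalid chunk-size line");
      }
      this.inExtension = true;
    }
    // Count every extension byte and stop once the budget is exhausted.
    this.extBytes += 1;
    if (this.extBytes > this.maxExtBytes) {
      throw new Error(`chunk extension exceeds ${this.maxExtBytes} bytes`);
    }
    return null;
  }
}

// Hypothetical convenience wrapper that feeds a whole line to the parser.
function parseChunkSizeLine(line: string, maxExtBytes = MAX_CHUNK_EXT_BYTES): number {
  const parser = new ChunkSizeLineParser(maxExtBytes);
  for (let i = 0; i < line.length; i++) {
    const size = parser.push(line.charAt(i));
    if (size !== null) return size;
  }
  throw new Error("incomplete chunk-size line");
}

console.log(parseChunkSizeLine("1a;name=value\r\n")); // 26
```

Counting bytes as they arrive, rather than after the line is complete, is what bounds the work per chunk: an oversized extension is rejected after at most MAX_CHUNK_EXT_BYTES + 1 extension bytes, however long the sender keeps transmitting.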

Official announcement: Please see the link below for details: