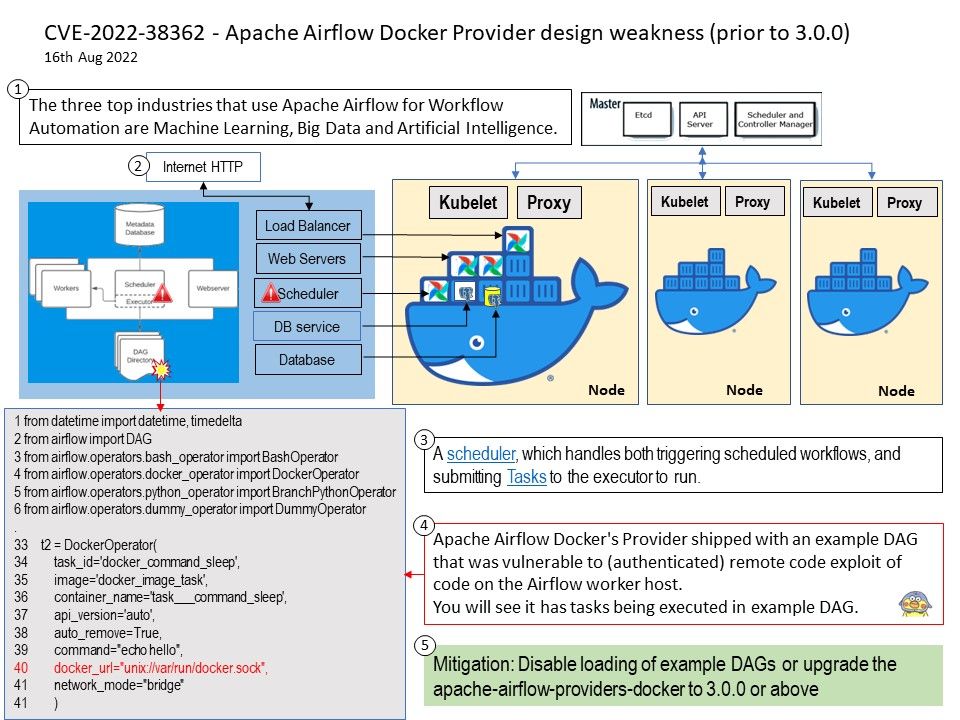

Preface: Machine learning, big data and artificial intelligence are among the most common areas that use Apache Airflow for workflow automation.

Background: Airflow is a platform that lets you build and run workflows. A workflow is represented as a DAG (a Directed Acyclic Graph), and contains individual pieces of work called Tasks, arranged with dependencies and data flows taken into account.
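The DAG idea can be illustrated without Airflow itself. The sketch below is a minimal, stdlib-only model (Python 3.9+ `graphlib`), not Airflow's API. Each hypothetical task lists the tasks it depends on, and a topological sort yields a run order that respects every dependency. It fails with `CycleError` if the graph is not acyclic.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def run_order(dependencies):
    """Return the tasks in an order that respects every dependency.

    Raises graphlib.CycleError if the graph has a cycle, i.e. is not a DAG.
    """
    return list(TopologicalSorter(dependencies).static_order())


order = run_order(pipeline)
print(order)  # "extract" comes first and "load" last
```

In an actual Airflow DAG file, the same shape would typically be declared with the bitshift operators, for example `extract >> [transform, validate] >> load`.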

The following covers the basics of deploying Airflow inside Kubernetes. I assume the following components are running as Docker containers and Services inside Kubernetes: a Postgres container, a Postgres Service, the Airflow webserver, the Airflow scheduler and an Airflow LoadBalancer Service.

The steps below give a quick overview of how these components are deployed.

Step 1. Get the Apache Airflow Docker image.

Step 2. Deploy Postgres into Kubernetes.

Step 3. Deploy a Service for Postgres.

Step 4. Prepare the Postgres database for Airflow.

Step 5. Write the Kubernetes YAML files for the Airflow webserver and scheduler.

Step 6. Deploy a LoadBalancer Service to expose the Airflow UI to the Internet.

Warning: Once this step is complete, anyone on the Internet who knows its address can reach the Airflow UI.
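The Kubernetes objects behind Steps 2 to 6 can be sketched as follows. This is a minimal sketch, not the author's manifests. It builds the objects as Python dicts and prints a JSON `List`, which `kubectl apply -f` accepts in place of YAML. The image tags, object names, credentials and the `203.0.113.0/24` source range are hypothetical. Postgres gets no persistent volume here, and the plain-text password belongs in a Secret in practice.

Step 4 is only partly covered. The official `postgres` image creates the `airflow` database from `POSTGRES_DB`, but the Airflow schema still has to be created with `airflow db init` (Airflow 2.x before 2.7) or `airflow db migrate` (2.7 and later), plus an admin login via `airflow users create`. One way to run these is `kubectl exec` into the webserver pod.

```python
import json
from urllib.parse import quote_plus

# Illustrative assumptions throughout: names, tags, credentials and CIDR.
AIRFLOW_IMAGE = "apache/airflow:2.6.3"  # hypothetical pin; use a tag you have tested
POSTGRES_IMAGE = "postgres:13"
DB_USER, DB_PASSWORD, DB_NAME = "airflow", "change-me", "airflow"  # use a Secret in practice


def deployment(name, image, env, args=None, port=None):
    """Single-replica Deployment whose pods carry the label app=<name>."""
    container = {"name": name, "image": image,
                 "env": [{"name": k, "value": v} for k, v in env.items()]}
    if args:
        container["args"] = args  # the official Airflow image passes these to `airflow`
    if port:
        container["ports"] = [{"containerPort": port}]
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels},
                         "spec": {"containers": [container]}},
        },
    }


def service(name, app, port, service_type="ClusterIP", source_ranges=None):
    """Service routing `port` to pods labelled app=<app>."""
    spec = {"type": service_type, "selector": {"app": app},
            "ports": [{"port": port, "targetPort": port}]}
    if source_ranges:  # applies to LoadBalancer Services; support depends on the cloud
        spec["loadBalancerSourceRanges"] = source_ranges
    return {"apiVersion": "v1", "kind": "Service",
            "metadata": {"name": name}, "spec": spec}


# Metadata DB connection (Airflow 2.3+ name; older releases read
# AIRFLOW__CORE__SQL_ALCHEMY_CONN). "postgres" is the Service's DNS name.
airflow_env = {
    "AIRFLOW__DATABASE__SQL_ALCHEMY_CONN":
        f"postgresql+psycopg2://{DB_USER}:{quote_plus(DB_PASSWORD)}@postgres:5432/{DB_NAME}",
    "AIRFLOW__CORE__EXECUTOR": "LocalExecutor",
    "AIRFLOW__CORE__LOAD_EXAMPLES": "False",  # keeps the bundled example DAGs from loading
}

manifests = [
    # Step 2: Postgres; the official image creates DB_NAME on first start.
    deployment("postgres", POSTGRES_IMAGE, {"POSTGRES_USER": DB_USER,
               "POSTGRES_PASSWORD": DB_PASSWORD, "POSTGRES_DB": DB_NAME}, port=5432),
    # Step 3: in-cluster Service so Airflow can reach Postgres as "postgres".
    service("postgres", "postgres", 5432),
    # Step 5: webserver and scheduler from the same image, different commands.
    deployment("airflow-webserver", AIRFLOW_IMAGE, airflow_env, args=["webserver"], port=8080),
    deployment("airflow-scheduler", AIRFLOW_IMAGE, airflow_env, args=["scheduler"]),
    # Step 6: public LoadBalancer, narrowed to a hypothetical trusted CIDR.
    service("airflow-webserver", "airflow-webserver", 8080, "LoadBalancer",
            source_ranges=["203.0.113.0/24"]),
]

print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Assuming the script is saved under a hypothetical name such as `airflow_manifests.py`, it could be applied with `python airflow_manifests.py > airflow.json && kubectl apply -f airflow.json`. Generating the objects from two small helpers keeps each Deployment's selector and pod labels in sync. Without that, a Service would silently match no pods.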

Vulnerability details: The Apache Airflow Docker provider (apache-airflow-providers-docker) prior to 3.0.0 shipped with an example DAG that was vulnerable to (authenticated) remote code execution on the Airflow worker host.

Remark: A DAG is defined in a Python script, which represents the DAG's structure (tasks and their dependencies) as code.

Mitigation: Disable loading of example DAGs, or upgrade apache-airflow-providers-docker to version 3.0.0 or above.
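A quick way to check whether a given environment still needs the mitigation is sketched below. It is a minimal, stdlib-only sketch, and the helper names are hypothetical. A host counts as exposed only when the provider is older than 3.0.0 *and* example DAGs are still enabled. Example loading is governed by the `[core] load_examples` option, which can also be set through the `AIRFLOW__CORE__LOAD_EXAMPLES` environment variable (in Kubernetes, typically as a container env entry). The sketch inspects only that variable, not `airflow.cfg`, and its version parsing deliberately ignores pre-release suffixes.

```python
import os
import re
from importlib import metadata

FIXED_IN = (3, 0, 0)  # per the advisory, provider versions before 3.0.0 are affected


def release_tuple(version):
    """Numeric release part of a version string, e.g. '2.7.1' -> (2, 7, 1).

    Simplification: pre-release/dev suffixes are ignored, so '3.0.0rc1' -> (3, 0, 0).
    """
    match = re.match(r"(\d+)(?:\.(\d+))?(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognised version: {version!r}")
    return tuple(int(part or 0) for part in match.groups())


def examples_disabled(env):
    """True if AIRFLOW__CORE__LOAD_EXAMPLES holds a false value (unset means enabled)."""
    return env.get("AIRFLOW__CORE__LOAD_EXAMPLES", "").strip().lower() in {"f", "false", "0"}


def is_exposed(provider_version, env):
    """Exposed only if the provider is old AND example DAGs may still load."""
    return release_tuple(provider_version) < FIXED_IN and not examples_disabled(env)


if __name__ == "__main__":
    try:
        installed = metadata.version("apache-airflow-providers-docker")
    except metadata.PackageNotFoundError:
        print("apache-airflow-providers-docker is not installed")
    else:
        print(installed, "exposed" if is_exposed(installed, os.environ) else "mitigated")
```

Either condition on its own is enough to mitigate, which matches the advisory's "disable or upgrade" wording.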

Official announcement: For details, see https://lists.apache.org/thread/614p38nf4gbk8xhvnskj9b1sqo2dknkb