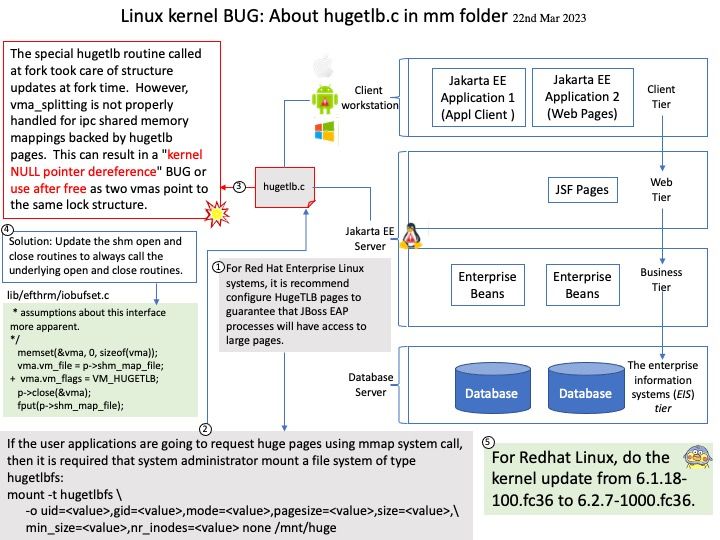

Preface: Enabling HugePages makes it possible for the operating system to support memory pages greater than the default (usually 4 KB). Using very large page sizes can improve system performance by reducing the amount of system resources required to access page table entries.
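As a rough illustration of why larger pages reduce page-table overhead, the sketch below counts how many pages (and hence leaf page table entries) are needed to map the same region with 4 KB and 2 MB pages. The 4 GiB region size is a hypothetical figure chosen for the example, not a value from this document.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def pages_needed(region_bytes, page_bytes):
    """Return the number of pages required to map region_bytes (ceiling division)."""
    return -(-region_bytes // page_bytes)


# Hypothetical region size, used only for illustration.
region = 4 * GIB

small_pages = pages_needed(region, 4 * KIB)
huge_pages = pages_needed(region, 2 * MIB)

print("4 KB pages:", small_pages)
print("2 MB pages:", huge_pages)
print("reduction factor:", small_pages // huge_pages)
```

With these assumed numbers, the 2 MB mapping needs 512 times fewer entries than the 4 KB mapping.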

Background: For Red Hat Enterprise Linux systems, it is recommended to configure HugeTLB pages to guarantee that JBoss EAP processes will have access to large pages.
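One way to check whether huge pages have actually been reserved is to look at the HugePages fields that Linux reports in /proc/meminfo. The sketch below parses text in that format. The sample values are made up for illustration, and the function name `hugepage_status` is hypothetical.

```python
def hugepage_status(meminfo_text):
    """Extract the HugePages_* counters and Hugepagesize (in kB) from meminfo-style text."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, sep, rest = line.partition(":")
        if sep and (name.startswith("HugePages_") or name == "Hugepagesize"):
            # The first token after the colon is the numeric value.
            fields[name] = int(rest.split()[0])
    return fields


# Illustrative sample only; real values come from /proc/meminfo on the host.
SAMPLE = """MemTotal:       16384000 kB
HugePages_Total:     512
HugePages_Free:      500
HugePages_Rsvd:        0
HugePages_Surp:        0
Hugepagesize:       2048 kB
"""

status = hugepage_status(SAMPLE)
print(status)
```

On a live system, the same function could be fed the contents of /proc/meminfo. A HugePages_Total of 0 would suggest that no pool has been preallocated.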

Reminder: Activating large pages for JBoss EAP JVMs results in pages that are locked in memory and cannot be swapped to disk like regular memory.

Ref: HugeTLB boot command-line parameter semantics for hugepagesz: specify a huge page size. It is used in conjunction with the hugepages parameter to preallocate a number of huge pages of the specified size. Hence, hugepagesz and hugepages are typically specified in pairs, such as: hugepagesz=2M hugepages=512.
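To make the pairing concrete, the following sketch totals the memory that a set of hugepagesz/hugepages pairs would preallocate. It is a simplified illustration, not the kernel's parser: it assumes each hugepages value is a plain integer that follows its hugepagesz, and it ignores a hugepages parameter that appears without a preceding hugepagesz. The function names are hypothetical.

```python
import re

_UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_size(text):
    """Convert a size such as '2M' or '1G' to bytes."""
    match = re.fullmatch(r"(\d+)([KMG]?)", text.upper())
    if match is None:
        raise ValueError("unrecognized size: " + text)
    number, unit = match.groups()
    return int(number) * _UNITS[unit]


def preallocated_pools(cmdline):
    """Map each page size in bytes to the page count requested for it."""
    pools = {}
    current_size = None
    for token in cmdline.split():
        key, _, value = token.partition("=")
        if key == "hugepagesz":
            current_size = parse_size(value)
        elif key == "hugepages" and current_size is not None:
            pools[current_size] = int(value)
            current_size = None  # each size is consumed by one count
    return pools


pools = preallocated_pools("hugepagesz=2M hugepages=512")
total_bytes = sum(size * count for size, count in pools.items())
print(pools, total_bytes)
```

For the pair shown in the text, 512 pages of 2 MB each amount to 1 GiB of preallocated memory.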

Design weakness: The special hugetlb routine called at fork took care of structure updates at fork time. However, VMA splitting is not properly handled for IPC shared memory mappings backed by hugetlb pages. This can result in a “kernel NULL pointer dereference” BUG or a use-after-free, because two VMAs point to the same lock structure.

Solution: Update the shm open and close routines to always call the underlying open and close routines.

For Red Hat Linux, update the kernel from 6.1.18-100.fc36 to 6.2.7-1000.fc36.

Technical reference: A subroutine, IOBUFSET, is provided to carve up an arbitrarily sized storage area into perforated buffer blocks with space for 132 data bytes. The beginning and ending addresses of the buffer storage area are passed to IOBUFSET in the A- and B-registers, respectively.
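The carving step can be sketched as follows. This is not the IOBUFSET routine itself: it assumes each block is exactly 132 bytes with no header or link words, treats the ending address as exclusive, and discards any leftover space that cannot hold a full block. The function name `carve_blocks` is hypothetical.

```python
DATA_BYTES = 132  # data capacity per block, as stated in the text


def carve_blocks(start, end, block_bytes=DATA_BYTES):
    """Return the start address of every full block that fits in [start, end)."""
    if end < start:
        raise ValueError("end address precedes start address")
    count = (end - start) // block_bytes
    return [start + i * block_bytes for i in range(count)]


# Hypothetical addresses: a 400-byte area holds three full 132-byte blocks.
blocks = carve_blocks(1000, 1400)
print(blocks)
```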
