Preface: Artificial Intelligence applies machine learning and other techniques to solve problems. Will AI have an impact on humans?

Background: You can use a machine learning model to get predictions on new data for which you do not know the target. For instance, AWS is developing AI technology to predict cyber attacks, especially email spam and email phishing. Amazon ML supports three types of ML models: binary classification, multiclass classification, and regression. The type of model you should choose depends on the type of target that you want to predict: a two-valued target calls for binary classification, a target with several categories calls for multiclass classification, and a numeric target calls for regression.
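As a rough illustration of how the target decides the model type, here is a minimal sketch. The function `suggest_model_type` and its heuristic are hypothetical and written only for this post; they are not part of Amazon ML or any AWS API.

```python
def suggest_model_type(target_values):
    """Suggest a model type from example target values (illustrative heuristic only)."""
    distinct = set(target_values)
    # Exactly two distinct outcomes, e.g. "spam" vs "ham".
    if len(distinct) == 2:
        return "binary classification"
    # Numeric targets (excluding booleans) are treated as quantities to predict.
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in distinct):
        return "regression"
    # Anything else is treated as a set of categories.
    return "multiclass classification"


email_kind = suggest_model_type(["spam", "ham", "spam"])
email_category = suggest_model_type(["spam", "phishing", "legitimate"])
risk_score = suggest_model_type([1.5, 2.0, 3.7])
```

A real project would decide this from the meaning of the target column rather than from its values alone; the heuristic only mirrors the rule of thumb above.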

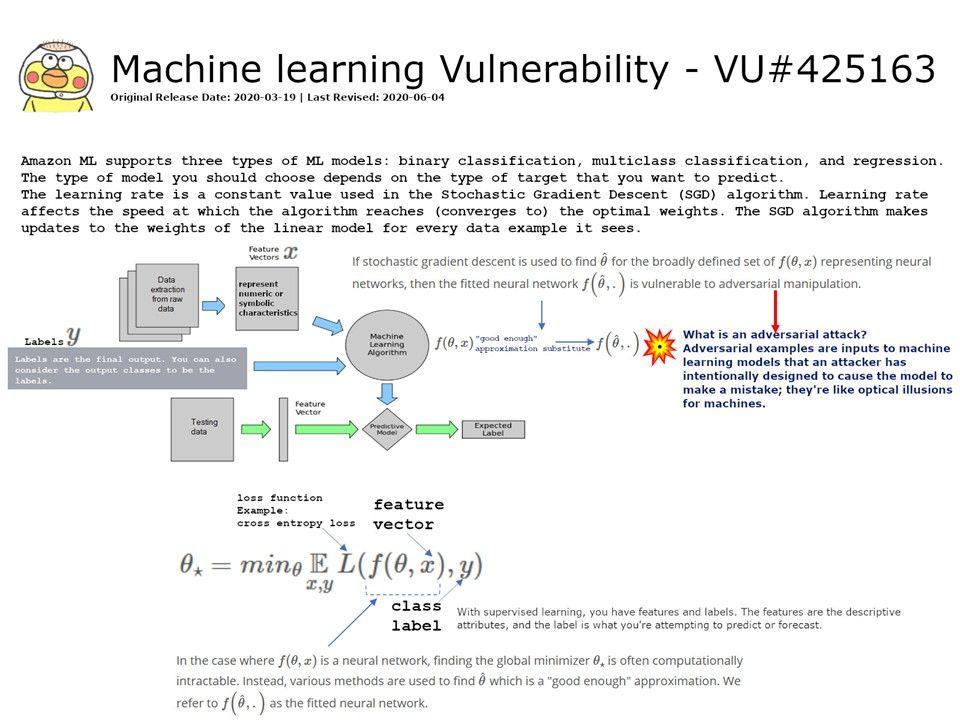

The learning rate is a constant value used in the Stochastic Gradient Descent (SGD) algorithm; it sets the size of each update step. If stochastic gradient descent is used to find a global minimizer for a broadly defined class of neural networks, then the fitted neural network will be vulnerable to adversarial manipulation.
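To make the role of the learning rate concrete, here is a minimal sketch of SGD with a constant learning rate, fitting a straight line to noise-free toy data. The function name `sgd_fit_line`, the data, and the parameter values are assumptions chosen for illustration; this is not how Amazon ML is implemented.

```python
import random


def sgd_fit_line(points, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w*x + b by SGD on squared error with a constant learning rate."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(points)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the samples in random order each epoch
        for x, y in data:
            error = (w * x + b) - y
            # Gradient of 0.5 * error**2 with respect to w and b,
            # scaled by the same constant learning rate at every step.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


# Toy data generated from y = 2x + 1 on x in [-1, 1] (illustrative only).
points = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(-10, 11)]
w, b = sgd_fit_line(points)
```

On this data the fitted `w` and `b` should land close to 2 and 1. A larger learning rate takes bigger steps and can overshoot, while a smaller one converges more slowly.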

What is an adversarial attack?

Adversarial examples are inputs to machine learning models that an attacker has intentionally designed to cause the model to make a mistake; they’re like optical illusions for machines.
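One well-known way to build such an input is the Fast Gradient Sign Method (FGSM), which the text above does not name; it is used here only as an illustration. The sketch below applies it to a tiny logistic model. The weights, input, and `epsilon` are made-up values, and the function names are hypothetical.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic model."""
    z = sum(w_i * x_i for w_i, x_i in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = predict_proba(weights, bias, x)
    # Gradient of the cross-entropy loss with respect to the input features.
    grad = [(p - label) * w_i for w_i in weights]
    return [x_i + epsilon * sign(g) for x_i, g in zip(x, grad)]


# Hypothetical model and input, chosen only for illustration.
weights, bias = [2.0, -1.0, 0.5], 0.0
x, label, epsilon = [0.3, 0.1, 0.2], 1, 0.2

x_adv = fgsm_perturb(weights, bias, x, label, epsilon)
clean_is_class_1 = predict_proba(weights, bias, x) > 0.5
adv_is_class_1 = predict_proba(weights, bias, x_adv) > 0.5
```

With these values the original input is classified as class 1, while the perturbed input, which differs by at most `epsilon` in each feature, falls on the other side of the 0.5 threshold. The attacker needs the gradient, or an estimate of it, which is why models trained by gradient descent are a natural target.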

For the official article, please refer to the following link: https://kb.cert.org/vuls/id/425163