Preface: NVIDIA Triton Inference Server, part of the NVIDIA AI platform and available with NVIDIA AI Enterprise, is open-source software that standardizes AI model deployment and execution across every workload.

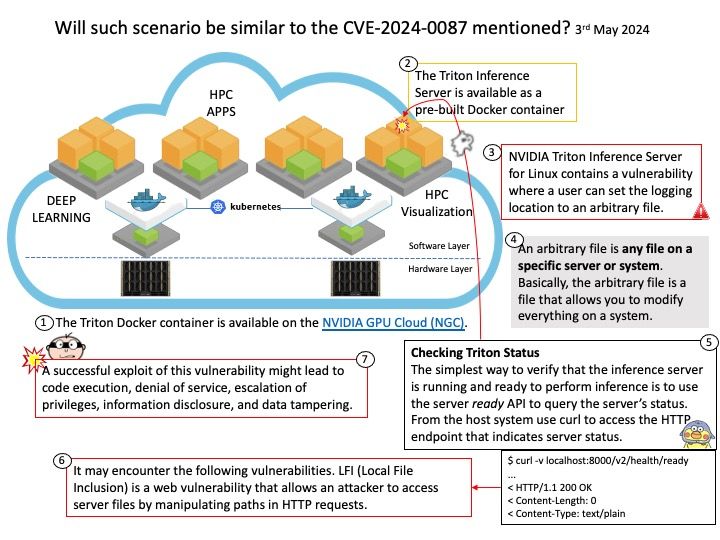

Background: The Triton Inference Server is available as a pre-built Docker container, or you can build it from source. The Triton Docker container is available on NVIDIA GPU Cloud (NGC). For best performance, the Triton Inference Server should be run on a system that has Docker, nvidia-docker, CUDA, and one or more supported GPUs.

Vulnerability details: NVIDIA Triton Inference Server for Linux contains a vulnerability where a user can set the logging location to an arbitrary file. If this file exists, logs are appended to the file. A successful exploit of this vulnerability might lead to code execution, denial of service, escalation of privileges, information disclosure, and data tampering.
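Illustration: The class of issue described above (a user-supplied log location that is accepted without restriction) is commonly mitigated by confining the path to a dedicated directory. The following is a minimal Python sketch of that general idea, not NVIDIA's actual fix. The directory `/var/log/triton` and the function name `is_safe_log_path` are assumptions made for this example only.

```python
from pathlib import Path

# Hypothetical directory that log files are allowed to live in
# (an assumption for this sketch, not a Triton default).
ALLOWED_LOG_DIR = Path("/var/log/triton")


def is_safe_log_path(requested: str, allowed_dir: Path = ALLOWED_LOG_DIR) -> bool:
    """Return True only if `requested` resolves to a file inside `allowed_dir`."""
    base = allowed_dir.resolve()
    # Joining with an absolute path discards `base`, so "/etc/passwd" stays
    # "/etc/passwd"; resolve() then collapses any ".." segments and symlinks.
    target = (base / requested).resolve()
    # Reject the directory itself and anything that ends up outside it.
    return target != base and base in target.parents


if __name__ == "__main__":
    for candidate in ["server.log", "/etc/passwd", "../../etc/cron.d/job"]:
        print(candidate, is_safe_log_path(candidate))
```

With a check of this kind, a relative name such as `server.log` is accepted, while an absolute path or a `../` traversal that escapes the allowed directory is rejected before any log data is appended.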

Ref: LFI (Local File Inclusion) is a web vulnerability that allows an attacker to access files on the server by manipulating file paths in HTTP requests.

Official announcement: Please refer to the link for details – https://nvidia.custhelp.com/app/answers/detail/a_id/5535