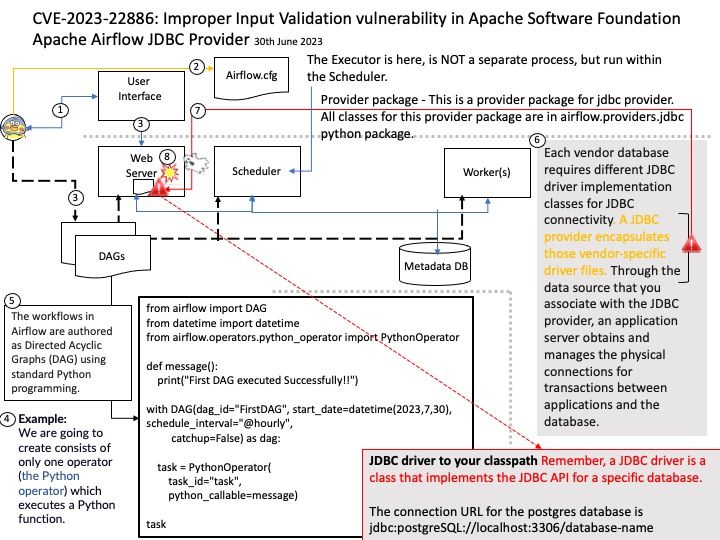

Preface: Airflow is a platform to programmatically author, schedule, and monitor workflows. Specifically, it is used in Machine Learning to create pipelines.

Background: Apache Airflow™ is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. It is most suitable for pipelines that change slowly, are related to a specific time interval, or are pre-scheduled. It’s a popular solution that many data engineers rely on for building their data pipelines. Because it is batch-oriented, it is not intended for processing continuous data streams in real time. It has been used to run SQL, machine learning models, and more.

Apache Airflow is a Python-based platform to programmatically author, schedule and monitor workflows. It is well-suited to machine learning for building pipelines, managing data and training models.

You can use Apache Airflow to schedule pipelines that extract data from multiple sources, run Spark jobs or other data transformations, and train machine learning models.

Vulnerability details: Improper Input Validation vulnerability in Apache Software Foundation Apache Airflow JDBC Provider. The [Connection URL] parameter of an Airflow JDBC Provider connection had no restrictions, which made it possible to carry out RCE attacks via different types of JDBC drivers and obtain Airflow server permissions. This issue affects Apache Airflow JDBC Provider versions before 4.0.0.

Recommendation: For security purposes, you should avoid building connection URLs from user input. For user name and password values, use the connection property collections. Restrict direct usage of driver parameters via extras for JDBC connections.
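If a connection URL must be accepted from outside at all, one way to follow the recommendation is to check it against a strict allowlist before it reaches a driver. The sketch below is illustrative only and is not part of Airflow or the JDBC provider: the function name `validate_jdbc_url`, the allowed sub-protocols, and the host name are all assumptions made for the example.

```python
from urllib.parse import urlsplit

# Hypothetical allowlists; adjust to the databases you actually operate.
ALLOWED_SUBPROTOCOLS = {"postgresql", "mysql"}
ALLOWED_HOSTS = {"db.internal.example.com"}


def validate_jdbc_url(url: str) -> str:
    """Return the URL unchanged if it passes the allowlist, else raise ValueError."""
    if not url.startswith("jdbc:"):
        raise ValueError("not a JDBC URL")

    # Strip the "jdbc:" prefix so the rest parses like an ordinary URL.
    parts = urlsplit(url[len("jdbc:"):])

    if parts.scheme not in ALLOWED_SUBPROTOCOLS:
        raise ValueError(f"sub-protocol {parts.scheme!r} is not allowed")
    if parts.hostname not in ALLOWED_HOSTS:
        raise ValueError(f"host {parts.hostname!r} is not allowed")
    # Credentials belong in the connection's login/password fields, not the URL.
    if parts.username or parts.password:
        raise ValueError("credentials must not be embedded in the URL")
    # Reject driver parameters smuggled in via "?key=value" or ";key=value".
    if parts.query or ";" in url:
        raise ValueError("driver parameters are not allowed in the URL")
    return url
```

Under these assumptions, `jdbc:postgresql://db.internal.example.com:5432/analytics` is accepted, while a URL that names an unlisted driver or carries extra parameters is rejected with a `ValueError`.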

Remedy: To configure driver parameters (driver path and driver class), you can use the following methods:

- Supply them as constructor arguments when instantiating the hook.

- Set the “driver_path” and/or “driver_class” parameters in the “hook_params” dictionary when creating the hook using SQL operators.

- Set the “driver_path” and/or “driver_class” extra in the connection and correspondingly enable the “allow_driver_path_in_extra” and/or “allow_driver_class_in_extra” options in the “providers.jdbc” section of the Airflow configuration.

- Patch the “JdbcHook.default_driver_path” and/or “JdbcHook.default_driver_class” values in the “local_settings.py” file.
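The first two methods in the list above can be sketched in Python as follows. This is a minimal illustration that assumes the JDBC provider (4.0.0 or later) and the common SQL provider are installed. The connection ID, driver path, driver class, task ID, and the helper names `build_hook` and `build_query_task` are hypothetical. The Airflow imports are placed inside the functions so the module can be loaded without Airflow present.

```python
# Hypothetical driver settings, used for illustration only.
DRIVER_PATH = "/opt/jdbc/postgresql.jar"
DRIVER_CLASS = "org.postgresql.Driver"

# Dictionary passed as `hook_params` when a SQL operator creates the hook.
HOOK_PARAMS = {"driver_path": DRIVER_PATH, "driver_class": DRIVER_CLASS}


def build_hook(conn_id: str = "my_jdbc_conn"):
    """Supply the driver parameters as constructor arguments to the hook."""
    from airflow.providers.jdbc.hooks.jdbc import JdbcHook

    return JdbcHook(
        jdbc_conn_id=conn_id,
        driver_path=DRIVER_PATH,
        driver_class=DRIVER_CLASS,
    )


def build_query_task(conn_id: str = "my_jdbc_conn"):
    """Supply the driver parameters through `hook_params` on a SQL operator."""
    from airflow.providers.common.sql.operators.sql import SQLExecuteQueryOperator

    return SQLExecuteQueryOperator(
        task_id="run_query",
        conn_id=conn_id,
        sql="SELECT 1",
        hook_params=HOOK_PARAMS,
    )
```

In both cases the driver settings live in code controlled by the DAG author rather than in the connection extras, so someone who can only edit connections cannot change them.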

Official announcement: For details, please refer to the link – https://github.com/advisories/GHSA-mm87-c3x2-6f89